Fair Max Cover

- Collaboration:

- This is a joint project with Dr. Bhaskar DasGupta (project lead), Dr. Tanya Berger-Wolf, and Dr. Anastasios Sidiropoulos.

- Publications:

- Abolfazl Asudeh, Tanya Berger-Wolf, Bhaskar DasGupta, Anastasios Sidiropoulos. Maximizing coverage while ensuring fairness: a tale of conflicting objective. Algorithmica, 2022, Springer.

- Abstract:

-

The abundance of data, along with recent advancements in computation, has enabled precise algorithmic decisions that could foster just and prosperous societies. Yet, as beneficial as these can be, irresponsible implementation of such algorithms can harm societies and individuals at an unprecedented scale. A large number of social policy decisions can be modeled as instances of the maximum coverage problem where, given a universe of elements and a collection of sets, the goal is to select

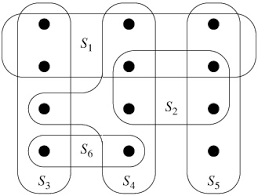

ksets that maximize the number of elements covered by them. Unfortunately, in societal applications, due to the impact of historical biases in the underlying data and reality itself, solely optimizing for coverage can lead to unfair selections that can harm specific minorities. In this project, We introduce the fair maximum coverage problem for addressing this issue. We study not only the computational complexity of the problem but also the complexity of finding a valid solution for it. We provide a relaxed version of the problem that allows solutions with anε-approximationon the fairness constraint. Next, proving an inapproximability for the problem for deterministic polynomial algorithms, we design a randomized approximation algorithm with guarantees on approximation on the disparity ratio.[code]

Algorithmic decision making is changing both small personal choices and big policy decisions. Recent advancements in computation, along with the abundance of big data, have provided a unique opportunity to make wise and precise decisions that foster just and prosperous societies. However, careless development of data-driven tools can not only fail to help but may create obstacles at an unprecedented scale. Unfortunately, algorithmic fairness has become a concern as these tools constantly fall short in satisfying their promise of efficiency and impartiality. Fortunately, following this, there has been an emerging research direction on algorithmic fairness, mainly in AI, focusing on ensuring that the outcomes of machine learning models are fair, for some definition of fairness. Despite this extensive research, fairness in many aspects of algorithmic data mining has not yet been well-explored.

Making selections that either directly or indirectly impact different groups of people is a task that is increasingly often done with the help of data. Such decisions range from the choice of hospitals to provide certain medical services to choosing social datasets for training predictive models. A sizable set of these problems can be viewed as special cases of the traditional maximum coverage (MC) problem. In such settings, one aims to make a limited number of selections, from different available options, each benefiting different communities or groups of people. The objective is to maximize the number of people that receive the benefit, or, in other words, to maximize the ``coverage''.

Unfortunately, historical discrimination, biases, and stereotypes against minorities have biased the society and the data. For example, the city of Chicago has been segregated as a result of redlining. This has an effect on many aspects of society, as seen through the lens of data, such as, for example, crime-rate. As a result, blindly optimizing for coverage, without considering the societal impacts can result in biased algorithms that are, for example, racist or sexist, or hurt marginalized people. Consider the city of Chicago as an example: police want to maximize coverage over the number crimes it can ``quickly'' respond to. Using Chicago crime-rate data, the police end up being more present in certain areas, which are heavily biased towards minority communities. More police presence in those areas increases the arrest chance for individuals living there; the higher arrest rate (playing as a proxy for crime rate) results in even more police presence in those neighborhoods, and this ill cycle keeps increasing the racial bias gap in the city.

The wide range of problems modeled as instances of MC underscore the importance of designing responsible algorithms that, while optimizing for coverage, also guarantee fairness. In this project, we formulate the problem as Fair Maximum Coverage (FMC), based on the notion of demographic parity. We propose complexity analysis and approximation algorithms for the problem under different settings.