94.8% compared with the second best method. OmniFair also achieves similar running time to preprocessing methods, and is up to 270x faster than in-processing methods.

Machine learning (ML) algorithms, in particular classification algorithms, are increasingly being used to aid decision making in every corner of society. There are growing concerns that these ML algorithms may exhibit various biases against certain groups of individuals. For example, some ML algorithms are shown to have bias against African Americans in predicting recidivism, in NYPD stop-and-frisk decisions, and in granting loans. Similarly, some are shown to have bias against women in job screening and in online advertising. ML algorithms can be biased primarily because the training data these algorithms rely on may be biased, often due to the way the training data are collected.

Due to the severe societal impacts of biased ML algorithms, various research communities are investing significant efforts in the general area of fairness --- two out of five best papers in the premier ML conference ICML 2018 are on algorithmic fairness, the best paper in the premier database conference SIGMOD 2019 is also on fairness, and even a new conference ACM FAccT (previously FAT*) dedicated to the topic has been started since 2017. One commonly cited reason for such an explosion of efforts is the lack of an agreed mathematical definition of a fair classifier. As such, many different fairness metrics have been proposed to determine how fair a classifier is with respect to a ``protected group'' of individuals (e.g., African-American or female) compared with other groups (e.g., Caucasian or male), including statistical parity, equalized odds, and predictive parity.

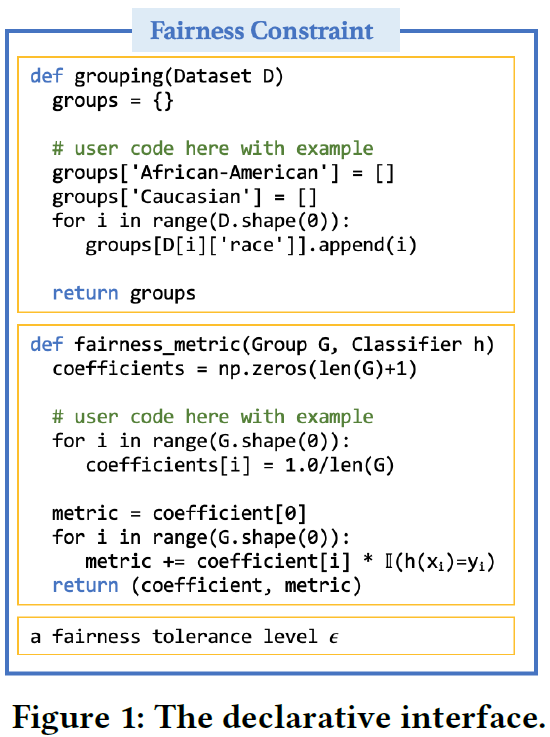

1. Declarative Group Fairness. Current algorithmic fairness techniques are mostly designed for particular types of group fairness constraint. In particular, preprocessing techniques often only handle statistical parity. While in-processing techniques generally support more types of constraints, they often require significant changes to the model training process. OMNIFAIR is able to support all the commonly used group fairness constraints. In addition, OMNIFAIR features a declarative interface that allows users to supply future customized fairness metrics. As shown in the following figure, a fairness specification in OMNIFAIR is a triplet (g, f, epsilon) with three components: (1) a grouping function g to specify demographic groups; (2) a fairness_metric function f to specify the fairness metric to compare between different groups; and (3) a value epsilon to specify the maximum disparity allowance between groups.

Given a dataset D, a chosen ML algorithm A (e.g., logistic regression), and a fairness specification (g, f, epsilon), OMNIFAIR will return a trained classifier h that maximizes accuracy on D and, at the same time, ensures that, for any two groups g_i and g_j in D according to the grouping function g, the absolute difference between their fairness metric numbers according to the fairness_metric function f is within the disparity allowance epsilon.

Figure 1 shows an example of constraint specification using our interface. Our interface can support not only all common group fairness constraints but also customized ones, including customized grouping functions such as intersectional groups and customized fairness metrics.

1. Declarative Group Fairness. Current algorithmic fairness techniques are mostly designed for particular types of group fairness constraint. In particular, preprocessing techniques often only handle statistical parity. While in-processing techniques generally support more types of constraints, they often require significant changes to the model training process. OMNIFAIR is able to support all the commonly used group fairness constraints. In addition, OMNIFAIR features a declarative interface that allows users to supply future customized fairness metrics. As shown in the following figure, a fairness specification in OMNIFAIR is a triplet (g, f, epsilon) with three components: (1) a grouping function g to specify demographic groups; (2) a fairness_metric function f to specify the fairness metric to compare between different groups; and (3) a value epsilon to specify the maximum disparity allowance between groups.

Given a dataset D, a chosen ML algorithm A (e.g., logistic regression), and a fairness specification (g, f, epsilon), OMNIFAIR will return a trained classifier h that maximizes accuracy on D and, at the same time, ensures that, for any two groups g_i and g_j in D according to the grouping function g, the absolute difference between their fairness metric numbers according to the fairness_metric function f is within the disparity allowance epsilon.

Figure 1 shows an example of constraint specification using our interface. Our interface can support not only all common group fairness constraints but also customized ones, including customized grouping functions such as intersectional groups and customized fairness metrics.

2. Example Weighting for Model-Agnostic Property. The main advantage of preprocessing techniques is that they can be used for any ML algorithm A. The model-agnostic property of preprocessing techniques is only possible when they limit the supported fairness constraints to those that do not involve both the prediction h(x) and the ground-truth label y (i.e., statistical parity).

Our system not only supports all constraints current in-processing techniques support, but also does so in a model-agnostic way.

Our key innovation to achieve the model-agnostic property is to translate the constrained optimization problem (i.e., maximizing for accuracy subject to fairness constraints) into a weighted unconstrained optimization problem (i.e., maximizing for weighted accuracy).

3. Supporting Multiple Fairness Constraints. While existing fairness ML techniques already support fewer types of single group fairness constraints than OMNIFAIR. In practice, users may wish to enforce multiple fairness constraints simultaneously. While some in-processing techniques theoretically can support these cases, it is practically extremely difficult to do so, as each fairness constraint is hard-coded as part of the constrained optimization training process. Our system can easily support multiple fairness constraints without any additional coding.

- Hantian Zhang, Xu Chu, Abolfazl Asudeh, Shamkant Navathe. OmniFair: A Declarative System for Model-Agnostic Group Fairness in Machine Learning. SIGMOD, 2021, ACM.

- (Workshop paper) Hantian Zhang, Nima Shahbazi, Xu Chu, Abolfazl Asudeh. FairRover: Explorative Model Building for Fair and Responsible Machine Learning. DEEM@SIGMOD, 2021, ACM.

- Xu Chu, Georgia Tech

- Hantian Zhang, Georgia Tech

- Nima Shahbazi, UIC

Data-driven decision making plays a significant role in modern societies by enabling wise decisions and to make societies more just, prosperous, inclusive, and safe. However, this comes with a great deal of responsibilities as improper development of data science technologies can not only fail but make matters worse. Judges in US courts, for example, use criminal assessment algorithms that are based on the background information of individuals for setting bails or sentencing criminals. While it could potentially lead to safer societies, an improper usage could result in deleterious consequences on people's lives. For instance, the recidivism scores provided for the judges are highly criticized as being discriminatory, as they assign higher risks to African American individuals.

Machine learning (ML) is at the center of data-driven decision making as it provides insightful unseen information about phenomena based on available observations. Two major reasons of unfair outcome of ML models are Bias in training data and Proxy attributes. The former is mainly due to the inherent bias (discrimination) in the historical data that reflects unfairness in society. For example, redlining is a systematic denial of services used in the past against specific racial communities, affecting historical data records. Proxy attributes on the other hand, are often used due to the limited access to labeled data, especially when it comes to societal applications. For example, when actual future recidivism records of individuals are not available, one may resort to information such as ``prior arrests'' that are easy to collect and use it as a proxy for the true labels, albeit a discriminatory one.

A new paradigm of fairness in machine learning has emerged to address the unfairness issues of predictive outcomes. These work often assume the availability of (possibly biased) labeled data in sufficient quantity. When this assumption is violated, their performance degrades. In many practical societal applications one operates in a constrained environment. Obtaining accurate labeled data is expensive, and could only be obtained in a limited amount. Training the model by using the (problematic) proxy attribute as the true label will result in an unfair model.

Our goal is to develop efficient and effective algorithms for fair models in an environment where the budget for labeled data is restricted. An obvious baseline is to randomly select a subset of data (depending on the available budget), obtain their labels, and use it for training. However, a more sophisticated approach would be to use an adaptive sampling strategy. Active learning is a widely used strategy for such a scenario. It sequentially chooses the unlabeled instances where their labeling is the most beneficial for the performance improvement of the ML model.

In this paper, we develop an active learning framework that will yield fair(er) models. Fairness has different definitions and is measurable in various ways. Specifically, we consider a model fair if its outcome does not depend on sensitive attributes such as race or gender.

- Hadis Anahideh, Abolfazl Asudeh, Saravanan Thirumuruganathan. Fair Active Learning. Expert Systems with Applications, 2022, Elsevier.

- Hadis Anahideh, University of Illinois Chicago

- Saravanan Thirumuruganathan