Fairness-aware Query Answering

- Collaboration:

- This is a joint project with the DBXLAB@UTA.

- Publications:

- [1] Suraj Shetiya, Ian Swift, Abolfazl Asudeh, Gautam Das. Fairness-Aware Range Queries for Selecting Unbiased Data. ICDE, 2022.

- Abstract:

- Since an algorithm is only as good as the data it works with, biases in the data can significantly amplify unfairness issues. Our vision in this project is towards integrating fairness conditions into database query processing and data management systems.

In the era of big data and advanced computation models, we are all constantly being judged by the analysis, algorithmic outcomes, and AI models generated using data about us. Such analysis are valuable as they assist decision makers take wise and just actions. For example, the abundance of large amounts of data has enabled building extensive big data systems to fight COVID-19, such as controlling the spread of the disease, or in finding effective factors, decisions, and policies. Similar examples can be found in almost all corners of human life including resource allocation and city policies, policing, judiciary system, college admission, credit scoring, breast cancer prediction, job interviewing, hiring, and promotion, to name a few. In particular, let us consider the following as a running example:

Looking at these analyses through the lens of fairness, algorithmic decisions look promising as they seem to eliminate human biases. However, ``an algorithm is only as good as the data it works with''. In fact, the use of data in all aforementioned applications have been highly criticised for being discriminatory, racist, sexist, and unfair. Probably the main reason is that real-life social data is almost always ``biased''. Using biased data for algorithmic decisions create fairness dilemmas such as impossibility and inherent trade-offs of fairness. Besides historical biases and false stereotypes reflected in data, other sources such as selection bias can amplify unfairness issues. To highlight a real example, let us continue with Example 1:

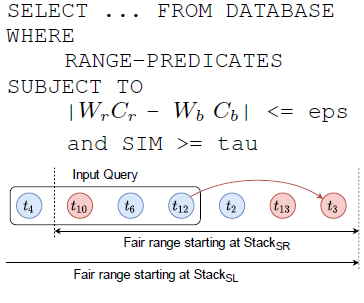

Despite extensive efforts within the database community, there is still a need to integrate fairness requirements with database systems. To address this need, as our first attempt, we consider range queries and pay attention to the facts: (i) the conditions in the range query may be selected intuitively by the human user. For instance, in Example 1 the user could have chosen $65K as the query bound because it was (roughly) a good choice that would make sense for them; (ii) considering the ethical obligations and consequences, the user might be interested in accepting a ``similar enough'' query to their initial choice, if it returns a ``fair'' outcome.

In Example 1, we note that the company could, for instance, in a post-query processing step, remove some male employees from the selected group, or it could add some females to the selected pool, even though they do not belong to the query result. While such fixes are technically easy, those are illegal in many jurisdictions, because those amount to disparate-treatment discrimination: ``when the decisions an individual user receives change with changes to her sensitive attribute information''. For instance, one cannot simply increase or decrease the grade of a student, because of their race or gender. Instead, they should design a ``fair rubric'' that is not discriminatory. Therefore, instead of practicing disparate treatment, we propose to adjusting the range to find a range (similar to finding a rubric for grading) with a fair output. Our system allows the user to specify the fairness and similarity constraints (in a declarative manner) along with the selection conditions, and we return an output range that satisfies these conditions. To further clarify this, let us continue with Example 1 in the following.

Our system provides an alternative to the initial query provided by the user. This is useful since often the choice of filtering ranges is ad-hoc, hence our system helps the user responsibly tune their range. If the discovered range is not satisfactory to the user, they can change the fairness and similarity requirements and explore different choices until they select the final result in a responsible manner.