Given support from the hypervisor, virtual machines (VMs) running on the same virtual machine monitor can communicate through shared memory, in addition to the network. Xen provides the grant table mechanism, a per-VM (per-domain) handle table that Xen actively maintains. Each entry points to a machine page that can be shared between domains. In addition, Xen event channels, an asynchronous notification mechanism, can be associated with the shared memory region. A VM can choose either to poll the shared memory or to be notified of communication on it.

This combination of shared memory (Xen grant table) and asynchronous notification (Xen event channel) is common in the Xen paravirtualization interface. For instance, it is used by the Xen console (DomU - Dom0), Xen Store (DomU - Dom0), and networking (front end - back end). One could set up shared-memory communication between two arbitrary (unprivileged) domains using the exact same mechanism. That is, rely on some directory service (Xen Store) for peer discovery, and set up a data structure (e.g. a ring buffer) for communication. Sending and receiving are done by copying data to and from the shared memory. This is what Xen’s libvchan does.

libvchan originated in the Qubes project, where it is mainly used for inter-domain communication. It was later adopted by Xen and has been substantially rewritten since. The Xen version of libvchan outperforms the original Qubes version, and is the default after Qubes 3.0.

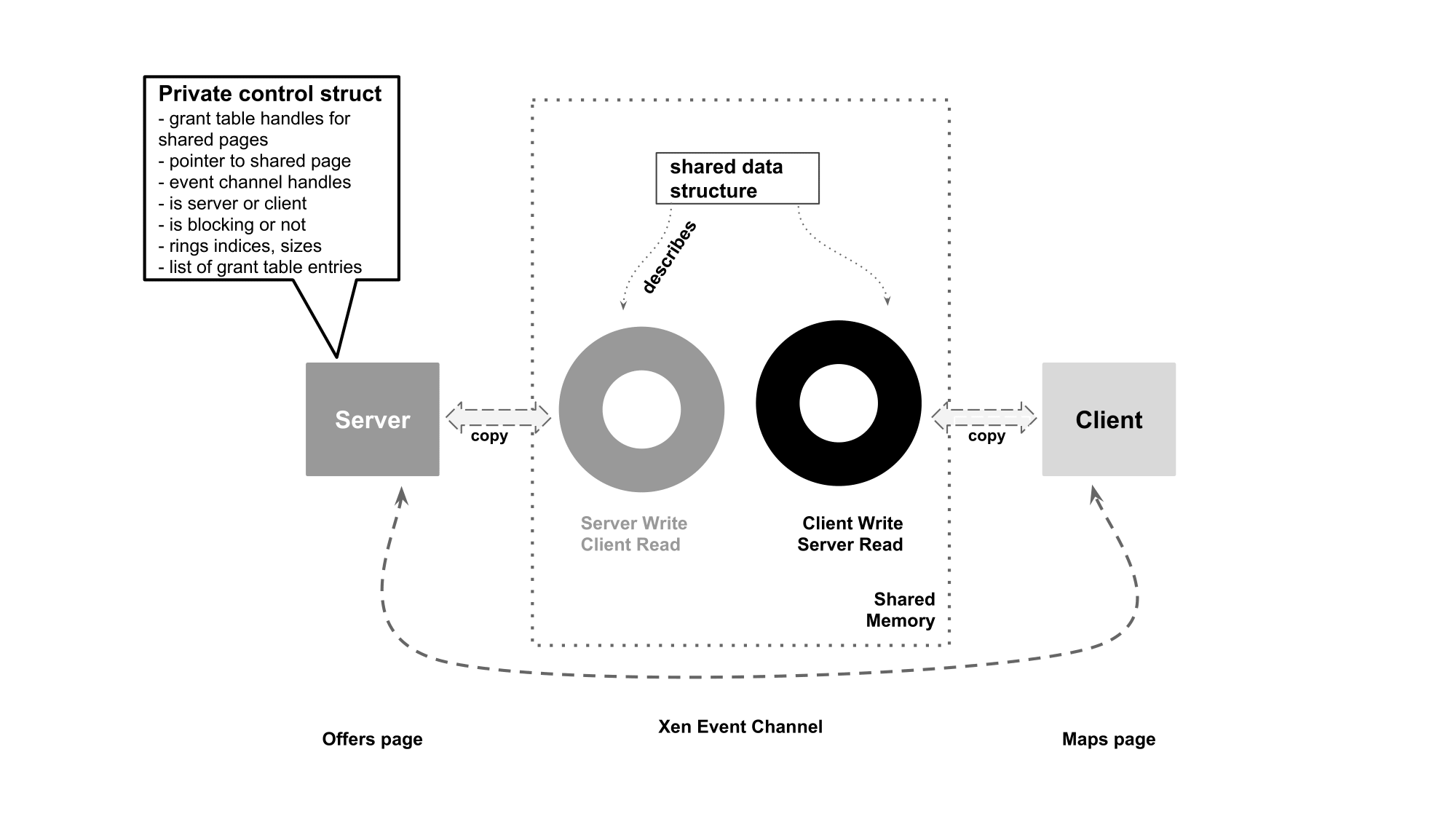

Shared memory communication in libvchan involves two parties: a server and a client. The server offers the memory used for communication, and advertises its credentials (grant ref(s) and event channel) in the directory service that Xen Store provides. Assuming the client knows the server’s domain id and service path, the client picks up that information from Xen Store and maps the server’s offering into its own virtual address space. After performing this rendezvous, the two can start to talk to each other.
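The rendezvous described above can be sketched with an in-memory table standing in for Xen Store. This is only an illustration under stated assumptions: the key names `ring-ref` and `event-channel`, the `credentials` struct, and every helper function here are hypothetical and are not the libvchan or Xen Store API.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Toy stand-in for Xen Store: a flat table of path -> value strings. */
#define STORE_MAX 16
struct store_entry { char path[96]; char value[32]; };
static struct store_entry store[STORE_MAX];
static int store_used;

/* Write (or overwrite) a value at a path; returns 0, or -1 if the table is full. */
static int store_write(const char *path, const char *value)
{
    int i;
    for (i = 0; i < store_used; i++)
        if (strcmp(store[i].path, path) == 0)
            break;
    if (i == STORE_MAX)
        return -1;
    snprintf(store[i].path, sizeof store[i].path, "%s", path);
    snprintf(store[i].value, sizeof store[i].value, "%s", value);
    if (i == store_used)
        store_used++;
    return 0;
}

/* Read the value at a path; returns NULL if nothing was written there. */
static const char *store_read(const char *path)
{
    for (int i = 0; i < store_used; i++)
        if (strcmp(store[i].path, path) == 0)
            return store[i].value;
    return NULL;
}

/* Hypothetical credentials: one grant reference and one event channel port. */
struct credentials { unsigned ring_ref; unsigned evtchn_port; };

/* Server side: advertise the credentials under the service path. */
static int server_advertise(const char *base, struct credentials c)
{
    char path[96], val[32];
    snprintf(path, sizeof path, "%s/ring-ref", base);
    snprintf(val, sizeof val, "%u", c.ring_ref);
    if (store_write(path, val) < 0)
        return -1;
    snprintf(path, sizeof path, "%s/event-channel", base);
    snprintf(val, sizeof val, "%u", c.evtchn_port);
    return store_write(path, val);
}

/* Client side: read the credentials back; -1 if the server has not advertised yet. */
static int client_lookup(const char *base, struct credentials *out)
{
    char path[96];
    const char *v;
    snprintf(path, sizeof path, "%s/ring-ref", base);
    if ((v = store_read(path)) == NULL || sscanf(v, "%u", &out->ring_ref) != 1)
        return -1;
    snprintf(path, sizeof path, "%s/event-channel", base);
    if ((v = store_read(path)) == NULL || sscanf(v, "%u", &out->evtchn_port) != 1)
        return -1;
    return 0;
}
```

In a real setup the client would then map the grant reference and bind the event channel port; the sketch stops at the lookup because those steps need the hypervisor.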

Communication happens on separate rings. The server and the client each have their own dedicated ring buffer for writes, so their writes never race against each other. The server reads from the client’s ring buffer and vice versa. The credentials for each ring are maintained in a shared control data structure.
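A minimal single-process sketch of the two-ring layout follows. The struct and field names are illustrative assumptions rather than libvchan’s actual definitions, and the memory barriers a real cross-domain ring needs are deliberately left out.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define RING_SIZE 8u /* power of two, so indices wrap with a mask */

/* One direction of traffic: exactly one writer and one reader. */
struct ring {
    uint32_t prod;            /* advanced only by the writer */
    uint32_t cons;            /* advanced only by the reader */
    uint8_t  data[RING_SIZE];
};

/* Hypothetical channel: one ring per direction, so writers never share a ring. */
struct channel {
    struct ring srv_to_cli;   /* written by the server, read by the client */
    struct ring cli_to_srv;   /* written by the client, read by the server */
};

/* Copy up to len bytes into the ring; returns the number of bytes written. */
static size_t ring_write(struct ring *r, const void *buf, size_t len)
{
    const uint8_t *src = buf;
    size_t space = RING_SIZE - (r->prod - r->cons);
    if (len > space)
        len = space;
    for (size_t i = 0; i < len; i++)
        r->data[(r->prod + i) & (RING_SIZE - 1)] = src[i];
    r->prod += (uint32_t)len; /* publish only after the data is in place */
    return len;
}

/* Copy up to len bytes out of the ring; returns the number of bytes read. */
static size_t ring_read(struct ring *r, void *buf, size_t len)
{
    uint8_t *dst = buf;
    size_t avail = r->prod - r->cons;
    if (len > avail)
        len = avail;
    for (size_t i = 0; i < len; i++)
        dst[i] = r->data[(r->cons + i) & (RING_SIZE - 1)];
    r->cons += (uint32_t)len; /* release the space back to the writer */
    return len;
}
```

Because each index has a single owner, the server only ever advances `srv_to_cli.prod` and `cli_to_srv.cons`, and the client only advances the other two.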

Sharing is done at page granularity. libvchan allows the user to specify the size of each ring, which is bounded by the grant table size limit for each domain. The server and the client can each choose blocking or non-blocking behavior. Figure 2 shows an overview of communication setup using libvchan.
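To make the page-granularity constraint concrete, the sketch below counts the pages (and therefore grant references) a requested pair of rings would need. The 4096-byte page size, the single extra control page, and the function names are assumptions made for illustration; libvchan’s real layout may differ.

```c
#include <assert.h>
#include <stddef.h>

#define SKETCH_PAGE_SIZE 4096u /* assumed page size */

/* Whole pages (one grant reference each) needed to back a ring of ring_bytes. */
static size_t pages_for_ring(size_t ring_bytes)
{
    return (ring_bytes + SKETCH_PAGE_SIZE - 1) / SKETCH_PAGE_SIZE;
}

/*
 * Returns 0 if both rings plus one assumed control page fit within
 * max_grants grant references, -1 otherwise.
 */
static int check_ring_request(size_t left_bytes, size_t right_bytes,
                              size_t max_grants)
{
    size_t needed = 1 + pages_for_ring(left_bytes) + pages_for_ring(right_bytes);
    return needed <= max_grants ? 0 : -1;
}
```

For example, two 8192-byte rings need two pages each, so under these assumptions the request needs five grant references in total.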

libvchan provides two sets of interfaces: one for stream-based communication and one for packet-based communication. We are only concerned with the packet-based interface in this document.

Below is the exhaustive list of libvchan interfaces.

This section describes how libvchan performs its operations.

In order to support libvchan on Ethos, we need to provide operating-system-dependent hooks for the Xen PV operations below:

Normally this is done by providing OS hooks in the individual libraries under Xen’s xc interface, i.e. libxenevtchn, libxengnttab, and libxenstore. Doing so allows libvchan to be used in user space. We don’t need to support these semantics in Ethos, so we can short-circuit this layer by plugging in Ethos kernel functions directly to implement the desired operations.

The Xen libraries wrap a layer that operates on “handles”, an indirection over the above-mentioned PV operations. A handle is essentially a file descriptor that indexes into the file descriptor table. In order to port libvchan, we need to support this abstraction by operating on integer handles.
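One way to provide such integer handles inside a kernel is a small fixed-size table, sketched below. The table size, the `handle_kind` values, and the function names are hypothetical and are not taken from Ethos or from the Xen libraries.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_HANDLES 8

/* Which subsystem a handle belongs to; H_FREE marks an unused slot. */
enum handle_kind { H_FREE = 0, H_EVTCHN, H_GNTTAB, H_XENSTORE };

struct handle_slot {
    enum handle_kind kind;
    void *state;              /* per-handle state owned by the subsystem */
};

static struct handle_slot handle_table[MAX_HANDLES];

/* Allocate the lowest free handle, like a file descriptor; -1 if the table is full. */
static int handle_open(enum handle_kind kind, void *state)
{
    assert(kind != H_FREE);
    for (int h = 0; h < MAX_HANDLES; h++) {
        if (handle_table[h].kind == H_FREE) {
            handle_table[h].kind = kind;
            handle_table[h].state = state;
            return h;
        }
    }
    return -1;
}

/* Resolve a handle to its state, checking that it has the expected kind. */
static void *handle_lookup(int h, enum handle_kind kind)
{
    if (h < 0 || h >= MAX_HANDLES || handle_table[h].kind != kind)
        return NULL;
    return handle_table[h].state;
}

/* Release a handle; -1 if it was not open. */
static int handle_close(int h)
{
    if (h < 0 || h >= MAX_HANDLES || handle_table[h].kind == H_FREE)
        return -1;
    handle_table[h].kind = H_FREE;
    handle_table[h].state = NULL;
    return 0;
}
```

Checking the kind on lookup keeps an event channel handle from being passed to a grant table operation by mistake, which a bare integer would otherwise allow.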

The operations on an event channel handle are:

The Xen event channel related operations are:

Similar to the Xen event channel operations, the grant table library operates on grant table “handles”, one layer above the raw grant table interface. The operations on Xen grant table handles are:

The rest of grant table operations can be divided into two sets: the server side which offers the physical backing memory for sharing and advertises the shared memory, and the client side which takes the advertised credentials and maps the shared memory.

Server side operations are:

Client side operations are:

Xen Store is the directory service used for server advertisement and client rendezvous. The server writes the credentials associated with a vchan to Xen Store, and the client reads them back.

Xen Store operations also happen on a handle. The handle related operations are:

The Xen Store operations are:

In addition, we also need to provide:

Also, low-level operations to modify fields atomically in shared data structure:
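As an illustration of the kind of atomic field update meant here, the sketch below sets and clears notification bits in a shared byte using C11 atomics. The flag names and helper functions are hypothetical, and the choice of C11 `<stdatomic.h>` is an assumption; a kernel port may use its own atomic primitives instead.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdint.h>

/* Hypothetical notification bits kept in a field of the shared control page. */
#define NOTIFY_WRITE 0x1u
#define NOTIFY_READ  0x2u

/* Ask the peer for a notification by atomically setting a bit. */
static void request_notify(_Atomic uint8_t *flags, uint8_t bit)
{
    atomic_fetch_or(flags, bit);
}

/*
 * Atomically clear a bit and report whether it was set beforehand,
 * so a requested notification is delivered at most once.
 */
static int test_and_clear_notify(_Atomic uint8_t *flags, uint8_t bit)
{
    uint8_t old = atomic_fetch_and(flags, (uint8_t)~bit);
    return (old & bit) != 0;
}
```

The read-modify-write has to be a single atomic step because both domains update the same byte concurrently; a plain load followed by a store could lose the peer’s update.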