© Mark Grechanik, 2016

ICST 2016

Conference Program

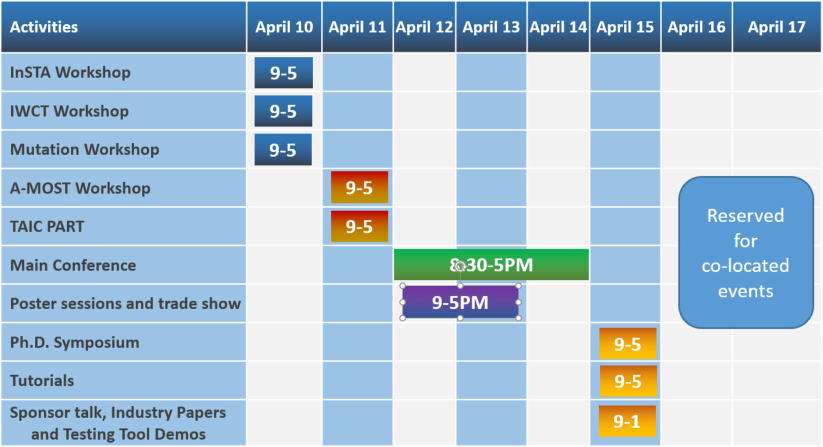

Preliminary Schedule at a Glance

April 10 (Sunday)

The 3rd International Workshop on Software Test Architecture (InSTA)
Held in the Shakespeare House conference room on the 14th floor.
Organizers:
- Yasuharu Nishi, The University of Electro-Communications, Japan
- Satomi Yoshizawa, NEC Corporation, Japan
- Satoshi Masuda, IBM Research, Japan
URL: http://aster.or.jp/workshops/insta2016
Description: Designing better software test architectures is important for software testing, and the software test architecture is a key to the test strategy. Software testing uses many terms for test design concepts, but there are no widely standardized diagrams or notations for communicating those concepts. Such notations would also help increase the productivity and reusability of tests by raising their level of abstraction, and help grasp the overall picture of the software under test. By focusing on the higher-level concepts of test architectures, our discussions can raise the quality of testing. Test architectures must be approached indirectly, as part of the test strategy. Some organizations are working to establish new ways to design novel test architectures, but there is no unified understanding of their key concepts. In this InSTA workshop, researchers and practitioners will discuss their research, industrial experiences, and emerging ideas about software test architectures. Topics include:
- concepts of test architectures, such as the abstraction of test cases;
- the design of test architectures through the application or enhancement of existing notations such as UML and UTP;
- test requirement analysis, such as patterns for test requirement analysis;
- applications of test architectures, such as their quality evaluation;
- other topics related to software testing.

The 5th International Workshop on Combinatorial Testing (IWCT 2016)
Held in the Steamboat Hotel conference room on the 14th floor.
Organizers:
- Angelo Gargantini, University of Bergamo, Italy
- Rachel Tzoref-Brill, IBM Research - Haifa, Israel
URL: http://iwct2016.unibg.it
Description: The 5th International Workshop on Combinatorial Testing (IWCT) focuses on combinatorial testing (CT), a widely applicable generic methodology and technology for software verification and validation. A combinatorial test plan covers all interactions between parameters up to a certain level. For example, in the special case of pairwise testing, every combination of values for every pair of parameters appears in at least one test. Studies show that CT is more efficient and effective than random testing and expert test selection methods. CT has gained significant interest in recent years, both in research and in practice. However, many issues remain unresolved, and much research is still needed in the field. In this workshop, we plan to bring together researchers actively working on combinatorial testing and create a productive and creative environment for sharing and collaboration. The workshop will also serve as a meeting place between academia and industry, uniting academic excellence with industrial experience and needs. Participants from academia will learn about industrial experience in applying CT to real-life testing problems. Industrial participants will have opportunities to meet the leading scientists in the field and learn about the latest advances and innovations.

Mutation Analysis (Mutation 2016)
Held in the Merchants Hotel conference room on the 14th floor.
Organizers:
- René Just, University of Washington, USA
- Jens Krinke, University College London, UK
- Christopher Henard, University of Luxembourg, Luxembourg
URL: https://sites.google.com/site/mutation2016
Description: Mutation is acknowledged as an important way to assess the fault-finding effectiveness of tests. Mutation testing has mostly been applied at the source code level. More recently, related ideas have also been used to test artifacts described in a wide variety of notations and at different levels of abstraction. Mutation ideas are used with requirements, formal specifications, architectural design notations, informal descriptions (e.g., use cases), and hardware. Mutation is now established as a major concept in software and systems verification and validation (V&V), and its uses are increasing. Mutation 2016 is the 11th in the series of international workshops focusing on mutation analysis. The workshop will be held in conjunction with the International Conference on Software Testing, Verification, and Validation (ICST 2016), and accepted papers will be published as part of the ICST proceedings. The goal of the Mutation workshop is to provide a forum for researchers and practitioners to discuss new and emerging trends in mutation analysis.

April 11 (Monday)

Advances in Model Based Testing (A-MOST 2016)
Held in the Merchants Hotel conference room on the 14th floor.
Organizers:
- Mike Papadakis, University of Luxembourg, Luxembourg
- Gilles Perrouin, University of Namur, Belgium
- Shaukat Ali, Simula Research Laboratory, Norway
URL: https://sites.google.com/site/amostw2016
Description: Model-based testing (MBT) continues to be an important research area for complex systems in industry, because it eases comprehension and increases the efficiency of test generation and automation. The goal of the A-MOST workshop is to bring together researchers and practitioners to discuss the state of the art, the state of practice, and future prospects.

Testing: Academia-Industry Collaboration, Practice and Research Techniques (TAIC PART)
Held in the Steamboat Hotel conference room on the 14th floor.
Organizers:
- Rudolf Ramler, Software Competence Center Hagenberg GmbH, Austria
- Darko Marinov, University of Illinois at Urbana-Champaign, IL, USA
- Takashi Kitamura, National Institute of Advanced Industrial Science and Technology (AIST), Japan
- Michael Felderer, University of Innsbruck & QE LaB Business Services, Austria
URL: http://www2016.taicpart.org
Description: Among software engineering activities, software testing is the perfect candidate for uniting academic and industrial minds. The workshop Testing: Academia-Industry Collaboration, Practice and Research Techniques (TAIC PART) is a unique event. It provides a stimulating platform for collaboration between industry and academia on challenging and exciting problems of real-world software testing. The workshop brings together practitioners and academic researchers in a friendly environment. Its goal is to transfer knowledge, exchange experiences, and deepen the understanding of the opportunities and challenges in collaboration between the two sides. Contributions to TAIC PART can range from the articulation of research questions in software quality engineering, testing, and analysis to the discussion of practical challenges faced in industry. The common theme is the advancement of approaches and methods for sustainable collaboration between academia and industry in software testing.

April 12 (Tuesday): The Main Conference Starts
Held in the Sauganash Ballroom on the 14th floor.

8:00am to 8:30am: Breakfast and registration
8:30am to 8:45am: Conference opening
8:45am to 9:00am: ASTQB sponsor talk by Dr. Tauhida Parveen
9:00am to 10:00am: Keynote talk by Prof. Betty Cheng
10:00am to 10:30am: Coffee break
10:30am to 12:30pm: Session 1: Test generation
Session Chair: Benoit Baudry
1. Eduard Paul Enoiu, Adnan Causevic, Daniel Sundmark and Paul Pettersson. A Controlled Experiment in Testing of Safety-Critical Embedded Software
2. Itai Segall. Repeated Combinatorial Test Design - Unleashing the Potential in Multiple Testing Iterations
3. Ruoyu Gao, Zhen Ming Jack Jiang, Cornel Barna and Marin Litoiu. A Framework to Evaluate the Effectiveness of Different Load Testing Analysis Techniques
4. Kevin Moran, Mario Linares-Vásquez, Carlos Bernal-Cárdenas, Christopher Vendome and Denys Poshyvanyk. Automatically Discovering, Reporting and Reproducing Android Application Crashes
12:30pm to 1:30pm: Lunch
1:30pm to 3:30pm: Session 2: Constraint solving and search
Session Chair: Tingting Yu
1. Daniel Liew, Alastair Donaldson and Cristian Cadar. Symbooglix: A Symbolic Execution Engine for Boogie Programs
2. Hong Lu, Tao Yue, Shaukat Ali and Li Zhang. Integrating Search and Constraint Solving for Nonconformity Resolving Recommendations of System Product Line Configuration
3. Bogdan Marculescu, Robert Feldt and Richard Torkar. Using Exploration Focused Techniques to Augment Search-Based Software Testing: An Experimental Evaluation
4. August Shi, Alex Gyori, Owolabi Legunsen and Darko Marinov. Detecting Assumptions on Deterministic Implementations of Non-deterministic Specifications
3:30pm to 4:00pm: Coffee break
4:00pm to 6:00pm: Session 3: Debugging
Session Chair: Sudipto Ghosh
1. Tao Ye, Lingming Zhang, Linzhang Wang and Xuandong Li. An Empirical Study on Detecting and Fixing Buffer Overflow Bugs
2. Paolo Arcaini, Angelo Gargantini and Paolo Vavassori. Automatic Detection and Removal of Conformance Faults in Feature Models
3. Wided Ghardallou, Nafi Diallo, Marcelo Frias and Ali Mili. Debugging Without Testing
4. Shih-Feng Sun and Andy Podgurski. Properties of Effective Metrics for Coverage-Based Statistical Fault Localization
6:30pm to 9:00pm: Poster session and trade show

April 13 (Wednesday): The Main Conference
Held in the Sauganash Ballroom on the 14th floor.

8:00am to 9:00am: Breakfast and registration
9:00am to 10:00am: Keynote talk by Prof. Adam Porter
10:00am to 10:30am: Coffee break
10:30am to 12:30pm: Session 4: Concurrency and Performance
Session Chair: Shaukat Ali
1. John Hughes, Benjamin Pierce, Thomas Arts and Ulf Norell. Mysteries of Dropbox: Property-Based Testing of a Distributed Synchronization Service
2. Maryam Abdul Ghafoor, Muhammad Suleman Mahmood and Junaid Haroon Siddiqui. Effective Partial Order Reduction in Model Checking Database Applications
3. Maicon Bernardino, Avelino F. Zorzo and Elder Macedo Rodrigues. Canopus: A Domain-Specific Language for Modeling Performance Testing
4. Tingting Yu, Wei Wen, Xue Han and Jane Huffman Hayes. Predicting Testability of Concurrent Programs
12:30pm to 1:30pm: Lunch
1:30pm to 3:30pm: Session 5: Web applications
Session Chair: Mukul Prasad
1. Mouna Hammoudi, Gregg Rothermel and Paolo Tonella. Why do Record/Replay Tests of Web Applications Break?
2. Sonal Mahajan, Bailan Li, Pooyan Behnamghader and William G. J. Halfond. Using Visual Symptoms for Debugging Presentation Failures in Web Applications
3. Abdulmajeed Alameer, Sonal Mahajan and William G. J. Halfond. Detecting and Localizing Internationalization Presentation Failures in Web Applications
4. Vahid Garousi and Kadir Herkiloğlu. Selecting the right topics for industry-academia collaborations in software testing
3:30pm to 4:00pm: Coffee break
4:00pm to 6:00pm: Session 6: Test analysis
Session Chair: Darko Marinov
1. Robert Feldt, Simon Poulding, David Clark and Shin Yoo. Test Set Diameter: Quantifying the Diversity of Sets of Test Cases
2. Chen Huo and James Clause. Interpreting Coverage Information Using Direct and Indirect Coverage
3. John Hughes and Thomas Arts. How well are your requirements tested?
4. Yufeng Cheng, Meng Wang, Yingfei Xiong, Dan Hao and Lu Zhang. Empirical Evaluation of Test Coverage for Functional Programs
7:00pm to 10:00pm: Banquet

April 14 (Thursday): The Main Conference
Held in the Sauganash Ballroom on the 14th floor.

8:00am to 9:00am: Breakfast and registration
9:00am to 10:00am: Keynote talk by Prof. A. Prasad Sistla
10:00am to 10:30am: Coffee break
10:30am to noon: Session 7: Regression testing
Session Chair: Andy Podgurski
1. Junjie Chen, Yanwei Bai, Dan Hao, Yingfei Xiong, Hongyu Zhang, Lu Zhang and Bing Xie. A Text-Vector Based Approach to Test Case Prioritization
2. Luís Pina and Michael Hicks. Tedsuto: A General Framework for Testing Dynamic Software Updates
3. Mohammed Al-Refai, Sudipto Ghosh and Walter Cazzola. Model-based Regression Test Selection for Validating Runtime Adaptation of Software Systems
Noon to 1:30pm: Lunch
1:30pm to 3:00pm: Session 8: Mutation
Session Chair: Mike Papadakis
1. Donghwan Shin and Doo-Hwan Bae. A Theoretical Framework for Understanding Mutation-Based Testing Methods
2. Eric Larson and Anna Kirk. Generating Evil Test Strings for Regular Expressions
3. Susumu Tokumoto, Hiroaki Yoshida, Kazunori Sakamoto and Shinichi Honiden. MuVM: Higher Order Mutation Analysis Virtual Machine for C
3:00pm to 3:30pm: Coffee break
3:30pm to 5:30pm: Session 9: Unit testing
Session Chair: Nan Li
1. Shabnam Mirshokraie, Ali Mesbah and Karthik Pattabiraman. Atrina: Inferring Unit Oracles from GUI Test Cases
2. Boyang Li, Christopher Vendome, Mario Linares-Vásquez, Denys Poshyvanyk and Nicholas Kraft. Automatically Documenting Unit Test Cases
3. Dominik Holling, Andreas Hofbauer, Alexander Pretschner and Matthias Gemmar. Profiting from Unit Tests For Integration Testing
4. Teng Long, Ilchul Yoon, Adam Porter, Atif Memon and Alan Sussman. Coordinated Collaborative Testing of Shared Software Components
5:45pm to 6:15pm: Conference closing
6:20pm to 7:00pm: Open Steering Committee meeting

April 15 (Friday): Tutorials, Industry Papers, Tool Demos, Doctoral Symposium

Doctoral Symposium: 9:00am to 5:30pm
Held in the Merchants Hotel conference room on the 14th floor.
9:00 - 10:30 Warm-up (chair: Mauro Pezzè)
- Introduction (Mauro Pezzè)
- A career in a research institution (Antonia Bertolino)
- A career in academia (Myra Cohen)
11:00 - 12:30 Student presentations (chair: Paolo Tonella)
- 11:00 Francesco Bianchi, Università della Svizzera Italiana: Testing Concurrent Software Systems
- 11:20 Emily Kowalczyk, University of Maryland: Modeling App Behavior from Multiple Artifacts
- 11:40 Sebastian Kunze, Halmstad University: Symbolic Characterisation of Commonalities in Testing Software Product Lines
- 12:00 Bruno Lima, University of Porto: Automated Scenario-based Testing of Distributed and Heterogeneous Systems
- 12:20 Wrap-up
12:30 - 14:00 Lunch with individual meetings between students and committee members
14:00 - 15:30 Student presentations (chair: Andreas Zeller)
- 14:00 Daniele Zuddas, Università della Svizzera Italiana: Semantic Testing of Interactive Applications
- 14:20 Zebao Gao, University of Maryland: Making System User Interactive Tests Repeatable: When and What Should we Control?
- 14:40 Joakim Gustavsson, KTH Royal Institute of Technology: Verification Methodology for Fully Autonomous Heavy Vehicles
- 15:00 Rui Xin, Università della Svizzera Italiana: Self-Healing Cloud Applications
- 15:20 Wrap-up
16:00 - 17:30 Closing remarks (chair: Mauro Pezzè)
- Open discussion

Tutorial 1: Parameterized Unit Testing: Theory and Practice
Instructors: Tao Xie, University of Illinois at Urbana-Champaign; Nikolai Tillmann, Microsoft; Pratap Lakshman, Microsoft
Time: 9:00am to 10:30am
Summary: With recent advances in test generation research such as dynamic symbolic execution, powerful test generation tools are now at the fingertips of developers in the software industry. For example, Microsoft Research Pex, a state-of-the-art tool based on dynamic symbolic execution, has shipped as IntelliTest in Visual Studio 2015, benefiting numerous developers in the software industry. Such tools allow developers to automatically generate test inputs for the code under test. These inputs comprehensively cover various program behaviors and achieve high code coverage. The tools help alleviate the burden of extensive manual software testing, especially test generation. However, by default they can check only a limited, predefined set of properties, which serve as test oracles for the automatically generated test inputs. Violating these predefined properties leads to runtime failures such as null dereferences or division by zero. Although valuable, these predefined properties are weak test oracles: they do not aim to check functional correctness but focus on the robustness of the code under test. Parameterized unit tests serve as strong test oracles that complement these powerful test generation tools in research and practice. This technical briefing presents the latest research on principles and techniques, as well as practical considerations for applying parameterized unit testing to real-world programs. It highlights success stories, research and education achievements, and future research directions in developer testing. The briefing will help improve developers' skills and knowledge for writing parameterized unit tests and give an overview of tool automation that supports parameterized unit tests. Attendees will acquire the skills and knowledge needed to perform research or conduct practice in the field of developer testing. They will also learn to integrate developer testing techniques into their own research, practice, and education.

Tutorial 2: Efficient Regression Testing with Ekstazi
Instructor: Milos Gligoric, University of Texas at Austin
Time: 11:00am to 12:30pm
Summary: Everyone wants faster regression testing. Regression testing runs the available tests against each commit to check that recent project changes did not break any previously working functionality. The cost of regression testing grows with the number of commits per day, which rises as projects make small and frequent changes (considered a good practice because they simplify code reviews). Several studies have reported that regression testing accounts for more than 50% of development effort. Regression test selection (RTS) speeds up regression testing by tracking the dependencies of each test and skipping tests whose dependencies did not change at the new commit. This tutorial presents Ekstazi (www.ekstazi.org), the first safe and efficient RTS technique for projects written in JVM languages (e.g., Java, Scala, Groovy). Ekstazi is also the first RTS tool adopted (in less than one year) by popular open-source projects, including Apache Camel, Apache Commons Math, and Apache CXF, as well as by several companies. This tutorial will present the technical details behind Ekstazi, several approaches to integrating Ekstazi into existing projects, and multiple ways to extend Ekstazi to support in-house build systems or testing frameworks. Additionally, we will present our experience integrating and running Ekstazi on 615 commits of 32 open-source projects (totaling almost 5M lines of code). For longer-running test suites, Ekstazi reduced resource consumption by up to an order of magnitude compared with running the entire test suites. Finally, the tutorial will discuss several potential improvements to Ekstazi.

Tutorial 3: Using the EvoSuite Unit Test Generator for Software Development and Research
Instructors: Gordon Fraser, The University of Sheffield; Jose Miguel Rojas, The University of Sheffield
Time: 1:30pm to 5:00pm
Summary: The EvoSuite tool automatically generates JUnit tests for Java software. Given a class under test, EvoSuite creates sequences of calls that maximise testing criteria such as line and branch coverage. At the same time, it generates JUnit assertions that capture the current behaviour of the class under test. This tutorial is an interactive hands-on session in which participants learn how to use EvoSuite during software development as well as for software engineering research. We will cover basic usage on the command line, systematic use for large experimental studies, and integration into software development processes and human experiments. We will also show how to build and extend EvoSuite.

Industry Track Papers: 9:00am to 11:00am
Held in the Steamboat Hotel conference room on the 14th floor.
Chair: Miroslav Velev, Aries Design Automation, U.S.A.
1. Lorin Hochstein, Netflix Corp. Chaos Engineering
2. Hideo Tanida, Tadahiro Uehara, Hidetoshi Kurihara, Peng Li, Mukul Prasad and Indradeep Ghosh, Fujitsu Laboratories, Ltd. and Fujitsu Laboratories of America, Inc. Automated Unit Testing for Client Tier of Web Applications through Symbolic Execution
3. Metin Can Ayduran, HERE De GmbH. Testing Data or Code? A preliminary Data Driven Regression Testing Proposal
4. Veena Vaidyanathan, Groupon Inc. How Test Driven Development helped out Software Integration Project

Tool Demos: 11:00am to 12:30pm
Held in the Steamboat Hotel conference room on the 14th floor.
Chair: Miroslav Velev, Aries Design Automation, U.S.A.
1. Nan Li, Anthony Escalona and Tariq Kamal, Medidata Solutions. Skyfire: Model-Based Testing With Cucumber
2. Andrea Arcuri, Scienta, Norway, and University of Luxembourg, Luxembourg; Jose Campos, University of Sheffield; Gordon Fraser, University of Sheffield. Unit Test Generation During Software Development: EvoSuite Plugins for Maven, IntelliJ and Jenkins
3. Bertrand Stivalet and Elizabeth Fong, NIST. Large Scale Generation of Complex and Faulty PHP Test Cases
4. Rudolf Ramler and Thomas Wetzlmaier, SCCH Hagenberg; Werner Putschögl, Trumpf Maschinen Austria. A Framework for Monkey GUI Testing